Hello there,

Recently I have been asked a lot why we can't solve FAF's infrastructure-related problems and outages by investing more money into them. This is a fair question, but so far I have shied away from investing the time to explain it.

Feel free to ask additional questions; I probably won't cover everything in the first attempt. I might update this post based on your questions.

The implications of an open source project

When Vision bought FAF from ZePilot, he released all source code under open source licenses for a very good reason: Keeping FAF open source ensures that no matter what happens, in the ultimate worst case, other people can take the source code and run FAF on their own.

The FAF principles

To achieve this goal, we need to follow a few principles:

- All software services used in FAF must be open source, not just the ones written for FAF, but the complementary ones as well. => Every interested person has access to all pieces.

- Every developer should be capable of running FAF core on a local machine. => Every interested person can start developing on standard hardware.

- Every developer should be capable of replicating the whole setup. => Should FAF ever close down, someone else can run a copy of it without needing a master’s degree in FAFology or being a professional IT sysadmin.

- The use of external services should be avoided, as they cost money and can go out of business. => Every interested person can run a clone of FAF on any hoster in the world.

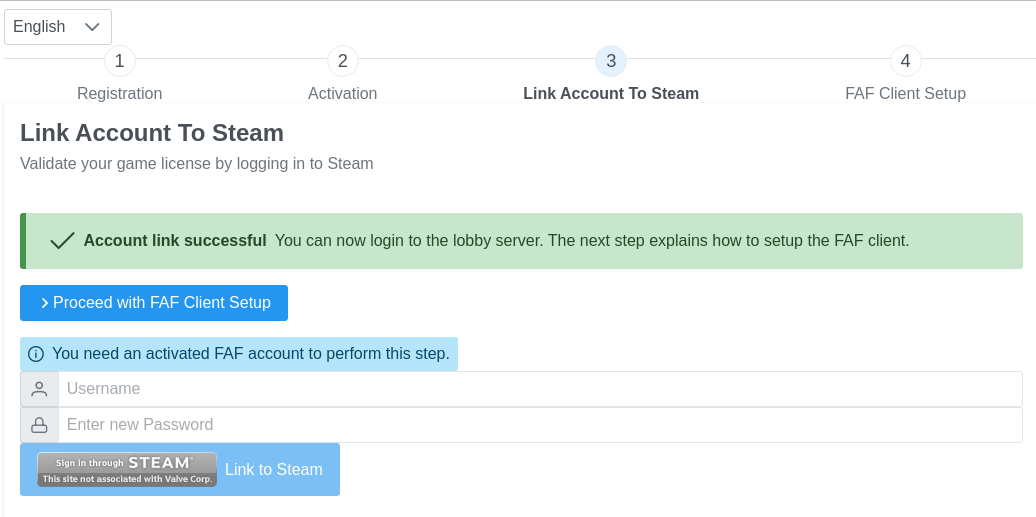

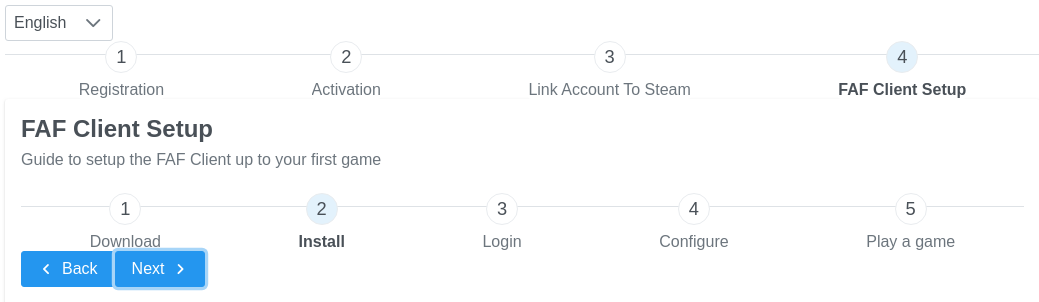

Software setup

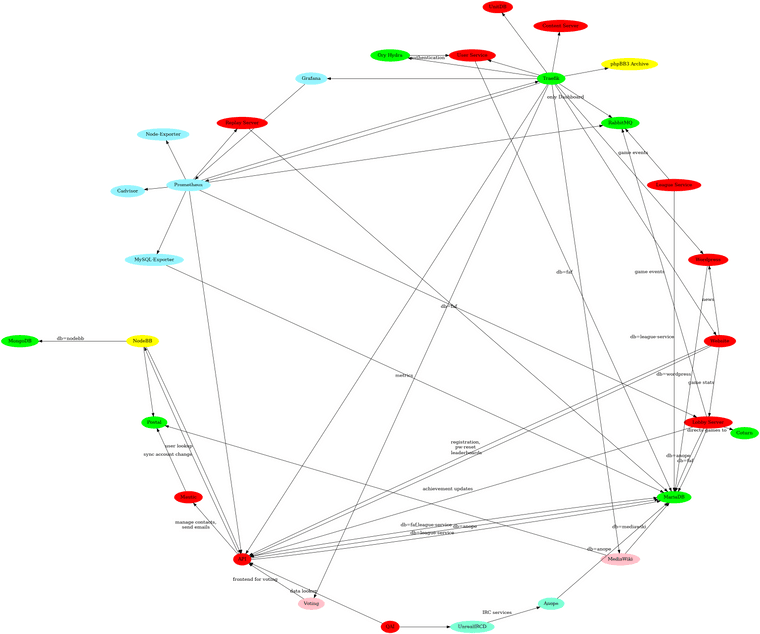

Since the beginning, and ever more so over time, the FAF ecosystem has expanded to include many additional pieces of software that interact with what I call the "core components".

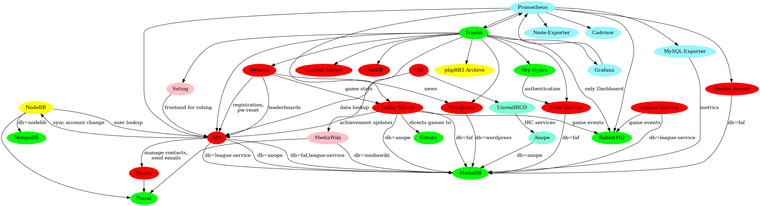

As of now, running a fully fledged FAF requires you to operate 30 (!!) different services. As stated earlier, due to our principles every single one of these is open source too.

As you can imagine, this large number of services serves very different purposes and has very different requirements. Some of them are tightly coupled with others (IRC, for example, is an essential part of the FAF experience), while others, like our wiki, can mostly run standalone. For some purposes we didn't have any choice of what to use, and we are happy that there is at least one piece of software meeting our requirements. Some software services were built at a time when distributed systems, zero-downtime maintenance and high availability weren't goals for small non-commercial projects. As a result they barely support such "modern" approaches to running software.

Current Architecture

The simple single

FAF was started as a one-man student hobby project. Its main purpose was to keep alive a game that was about to be abandoned by its publisher. At that time nobody imagined that 300k users might register, that 10 million replays would be stored or that 2,100 users would be logged in at the same time.

At its core, FAF was built to run on a single machine, with all services running as a single monolithic instance. There are lots of benefits to this:

- From a software perspective this simplifies a few things: Network failures between services are impossible, latency between services is not a problem, and all services can access files from other services if required. Correctly configured, there is not much that can go wrong.

- It reduces the administration effort (permissions, housekeeping, monitoring, updates) to one main and one test server.

- There is only one place where backups need to be made.

- It all fits into a huge docker-compose stack, which also satisfies principles #2 and #3 (running FAF locally and replicating the whole setup).

- Resource sharing:

** "Generic" services such as MySQL or RabbitMQ can be reused for different use cases with no additional setup required.

** Across the whole server: Not all apps hit their peak CPU usage at the same time, so having them on the same machine reduces the total CPU capacity needed.

- The cost benefits are huge: We rent one machine with lots of CPU power, RAM and disk space, whereas with modern cloud instances you pay for each of these individually.
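To make the resource-sharing point concrete, here is a small sketch with made-up numbers (the per-service CPU loads are purely hypothetical, not FAF measurements). A shared machine only has to cover the peak of the combined load, while separate machines each have to be sized for their own service's peak, and the first is never larger than the second.

```python
# Hypothetical CPU usage (in cores) of three services at five points in time.
# These numbers are invented for illustration; they are not FAF measurements.
loads = {
    "lobby": [2, 3, 6, 8, 4],
    "api": [5, 4, 3, 2, 4],
    "replay": [1, 2, 2, 3, 6],
}


def peak_of_sum(series: dict[str, list[int]]) -> int:
    """Cores needed if all services share one machine."""
    return max(sum(values) for values in zip(*series.values()))


def sum_of_peaks(series: dict[str, list[int]]) -> int:
    """Cores needed if every service gets its own machine sized for its own peak."""
    return sum(max(values) for values in series.values())


shared = peak_of_sum(loads)
separate = sum_of_peaks(loads)
print(f"shared machine: {shared} cores, separate machines: {separate} cores")
```

With these invented numbers the shared machine needs 14 cores, while separate machines would need 19 in total.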

In this setup the only way to scale up is by using a bigger machine ("vertical scaling"). This is what we did in the past.

Single points of failure everywhere

A single point of failure is a single component that will take down or degrade the system as a whole if it fails. For FAF that means: You can't play a game.

Currently FAF is full of them:

- If the main server crashes or is degraded because of an attack or for other reasons (e.g. a full disk), all services become unavailable.

- If Traefik (our reverse proxy) goes down, all web services go down.

- If the MySQL database goes down, the lobby server, the user service, the API, the replay server, WordPress (and therefore the news on the website), the wiki and the IRC services go down as well.

- If the user service or Ory Hydra goes down, nobody can login with the client.

- If the lobby server goes down, nobody can login with the client or play a game.

- If the API goes down, nobody can start a new game or log in to some services, and voting goes down as well.

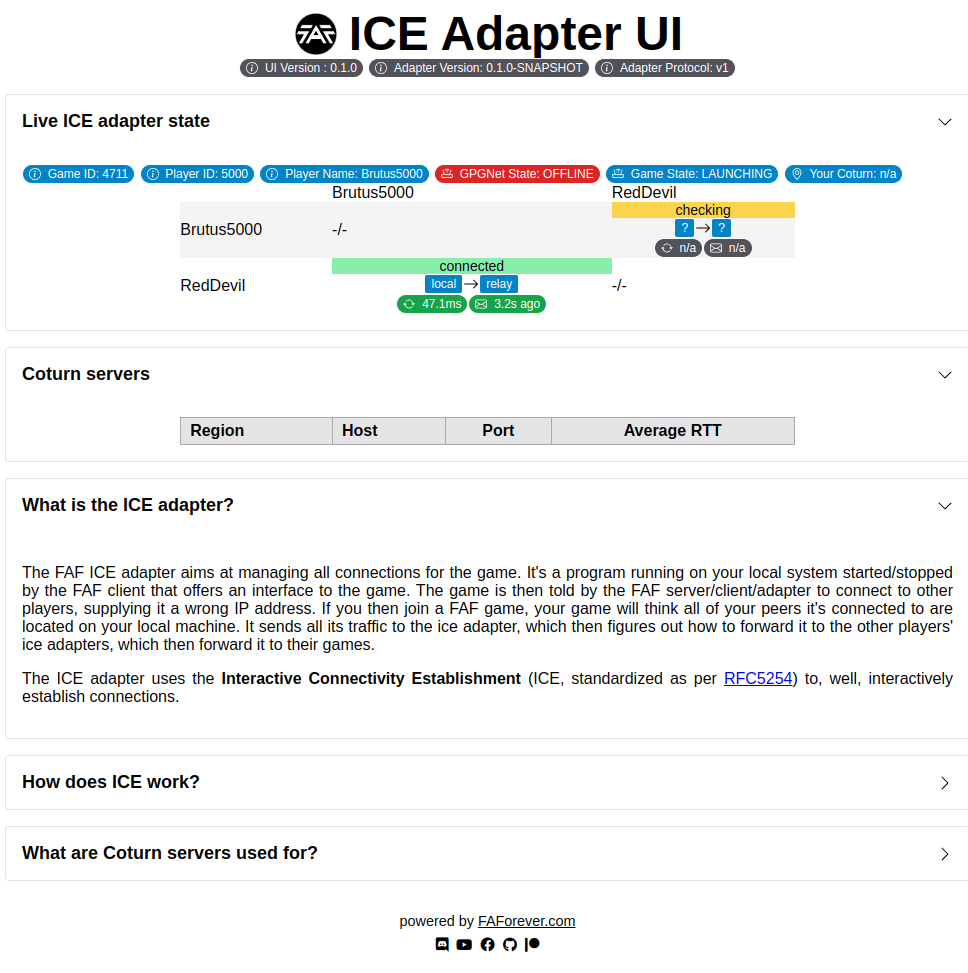

- If coturn goes down, current games die and no new games can be played unless you can live with the ping caused by the roundtrip to our Australian coturn server.

- If the content server goes down, clients can't download the remote configuration and crash on launch. Connected players can no longer download patches, maps, mods or replays.

At first, the list may look like an unfathomable catalogue of risks, but in practice the risk on a single server is moderate - usually.
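The list above is really a dependency graph, and the damage of one failure is everything reachable from it. Below is a simplified sketch that encodes only the dependencies named in this post (the component labels are my own shorthand, not real service names) and walks the graph breadth-first to find everything a single failure takes down.

```python
from collections import deque

# Simplified model: only the dependencies named in the list above.
# Keys are components; values are what stops working when that key goes down.
DEPENDENTS = {
    "main server": ["traefik", "mysql", "ory hydra", "coturn", "content server"],
    "traefik": ["web services"],
    "mysql": ["lobby server", "user service", "api", "replay server",
              "wordpress", "wiki", "irc services"],
    "wordpress": ["website news"],
    "user service": ["client login"],
    "ory hydra": ["client login"],
    "lobby server": ["client login", "playing games"],
    "api": ["starting games", "voting"],
    "coturn": ["running games", "playing games"],
    "content server": ["client startup", "map/mod/replay downloads"],
}


def impacted_by(failed: str) -> set[str]:
    """Return everything that goes down (transitively) when `failed` goes down."""
    down: set[str] = set()
    queue = deque([failed])
    while queue:
        component = queue.popleft()
        for dependent in DEPENDENTS.get(component, []):
            if dependent not in down:
                down.add(dependent)
                queue.append(dependent)
    return down


print(sorted(impacted_by("mysql")))
```

A MySQL outage alone already reaches "playing games" through the lobby server, which is exactly what makes it a single point of failure.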

Problem analysis

FAF has many bugs, issues and problems. Not all of them are critical, but for sure all of them are annoying.

Downtime history

In the past we had downtimes caused by:

- Global DNS issues

- Regular server updates

- Server performance degrading due to DoS attacks

- Server disk running full

- MySQL and/or API overloaded because of misbehaving internal services

- MySQL and/or API overloaded because of a bad caching setup in the API and clients

- MySQL and/or API overloaded because too many users were online

The main problem here is figuring out what actually causes a downtime or degradation of service. It's not always obvious. The last three items in particular look pretty much the same from a server monitoring perspective.

The last item is the only one that can be solved by scaling up to a more juicy server! And even then, in many cases it isn't required and can be avoided by tweaking the system further.

I hope it becomes clear that just throwing money at a more juicy server can only prevent a small fraction of downtime causes.

Complexity & Personnel situation

As mentioned before, FAF runs a complex setup of 30 different services in a docker-compose stack that is managed manually.

On top of that we also develop our own client using these services.

Let's put this into perspective:

I'm a professional software engineer. For the last 3 years I have worked on a B2B product (=> required availability Mon-Fri 9 to 5, excluding public holidays) that has 8 full-time devs, 1 full-time Linux admin / DevOps expert, 2 business experts and several customer support teams.

FAF, on the other hand, has by rough estimate 2-3x the complexity of said product. It runs 24/7, with special attention needed on public holidays. It uses

We have no full-time developers, we have no full-time server admins, we have no support team. There are 2 people with server access who do that in their free time after their regular work. There are 4-5 recurring core contributors working on the backend and a lot more rogue ones who occasionally add stuff.

(Sometimes it makes me wonder how we ever got this far.)

Why hiring people is not an option

It seems obvious that if we can't solve problems by throwing money at a better server, then maybe we should throw money at people and start hiring.

This is a multi-dimensional problem.

Costs

I'm from Germany and therefore I know that contracting an experienced freelance software developer from Western Europe full time costs between 70k€ and 140k€ per year.

That would be about 5,800€-11,700€ a month. Remember: Our total Patreon income is currently roughly 600€ a month.

So let's get cheaper. I work with highly qualified Romanian colleagues and they are much cheaper. There you probably get the same for 30k€ per year.

Even a 50% part-time developer at 1,250€ per month would cost about twice our Patreon income.
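For anyone who wants to double-check the numbers, here is the arithmetic as a few lines of Python. It only uses the yearly figures quoted above and assumes the roughly 600€ Patreon income is a monthly amount.

```python
# Assumption: the ~600 EUR Patreon income quoted above is per month.
PATREON_MONTHLY_EUR = 600


def monthly(yearly_eur: float) -> float:
    """Convert a yearly cost to a monthly one."""
    return yearly_eur / 12


western_low, western_high = monthly(70_000), monthly(140_000)
romanian_full_time = monthly(30_000)
romanian_half_time = romanian_full_time * 0.5

print(f"Western Europe freelancer: {western_low:.0f}-{western_high:.0f} EUR/month")
print(f"50% developer at 30k/year: {romanian_half_time:.0f} EUR/month "
      f"= {romanian_half_time / PATREON_MONTHLY_EUR:.1f}x Patreon income")
```

Even the cheapest option lands at roughly 2.1x what Patreon brings in.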

Skills and motivation

Imagine you are the one and only full-time paid FAF developer. You need a huge skillset to work on everything:

- Linux / server administration

- Docker

- Java, Spring, JavaFX

- Python

- JavaScript

- C#

- Bash

- SQL

- Network stack (TCP/IP, ICE)

- All the weird other services we use

Learning all this takes quite a while, and at the beginning you won't be that productive. But once you eventually master all of it, or even half of it, your market value has probably doubled or tripled and you can earn much more money elsewhere.

If you just came for the money, why would you stay if you can now earn double? This is not dry theory: For freelancers this is totally normal, and in regions such as Eastern Europe it is also much more common among regular employees.

So after our developer has gone through the pain and learned all that hard stuff, he leaves after two or three years. And the cycle begins anew.

Probably no external developer will ever have the intrinsic motivation to stay at FAF, since there are no career prospects.

Competing with the volunteers

So assume we hired a developer and you are a senior FAF veteran.

Scenario 1)

Who's gonna teach him? You? So he gets paid and you don't? That's not fair. I'm leaving.

This is the reaction I would expect from the majority of contributors (including myself). I personally find it hard to see Gyle earning 1000€ a month and myself getting nothing (to be fair, I never asked to be paid or opened a Patreon, so there is technically no reason to complain).

Scenario 2)

Woah, I'm so overworked and there is finally someone else to do it. I can finally start playing rather than doing dev work.

In this scenario, while we wouldn't drive contributors away, the total amount of work done might remain the same or even decline.

Scenario 3)

Yeah, I'm the one who got hired. Cool. But now it's my job and I don't want to lose the fun. Instead of working from 20:00 to 02:00, I'll just keep it to 9 to 5.

This is what would probably happen if you hired me. You can't work on the same thing day and night. In total you might invest a little more time, but not that much. Of course you are still more focused if it's your main job rather than something you do after your main job.

Who is the boss?

So assume we hired a developer. Are the FAF veterans telling the developer what to do? Or is the developer guiding the team now? Everybody has different opinions, but now one dude has a significant amount of time and can push through all the changes and ignore previous "gentlemen's agreements".

Is his main task to merge the pull requests of other contributors? Should he work only on groundwork?

This would be a very difficult task. Or maybe not? I don't know.

Nobody works 24/7

Even if you hire one developer, FAF still runs 24/7. So there are 16 hours a day when no developer is available. A developer also goes on vacation and isn't available on public holidays.

One developer doesn't solve all problems. But he creates many new ones.

Alternative options

So if throwing money at one server doesn't work and hiring a developer doesn't work, what can we do with our money?

How about:

- Buying more than one server!

- Outsource the complexity!

How do big companies achieve high availability? They don't run critical parts on one server, but on multiple servers instead. The idea behind this is to remove every single point of failure.

First of all, Dr. Google tells you how that works:

Dr. Google: Instead of one server you have n servers. Each application should run on at least two servers simultaneously, so the service keeps running if one server or application dies.

You: But what happens if the other server or application dies too?

Dr. Google: Well, in the best case you have some kind of orchestrator running that makes sure that as soon as an app or a server dies, the app is either restarted on the same server or started on another one.

You: But how do I know if my app or the server died?

Dr. Google: For that, all services need to offer a health check endpoint. That is basically a web page on your service that the orchestrator can call to see if it is still working.
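What Dr. Google describes boils down to a reconcile loop. The toy sketch below is not how Kubernetes or any other real orchestrator is implemented: the node and app names are hypothetical, and the `healthy` flag stands in for actually calling each app's health check endpoint over HTTP. It shows the two cases: an app fails its health check and is restarted in place, or its whole server dies and it is started elsewhere.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    alive: bool = True


@dataclass
class App:
    name: str
    node: Node
    # Stands in for the result of calling the app's health check endpoint.
    healthy: bool = True


def reconcile(apps: list[App], nodes: list[Node]) -> list[str]:
    """One pass of a toy orchestrator loop over all apps."""
    actions = []
    for app in apps:
        if not app.node.alive:
            # The whole server died: start the app on any server that is still up.
            spare = next((n for n in nodes if n.alive), None)
            if spare is None:
                actions.append(f"{app.name}: no live node left")
                continue
            app.node, app.healthy = spare, True
            actions.append(f"{app.name}: rescheduled to {spare.name}")
        elif not app.healthy:
            # The server is fine but the app failed its health check: restart in place.
            app.healthy = True
            actions.append(f"{app.name}: restarted on {app.node.name}")
    return actions


# Hypothetical two-node setup.
node_a, node_b = Node("node-a"), Node("node-b")
apps = [App("api", node_a), App("content-server", node_b)]
apps[0].healthy = False  # the api stops answering its health check
node_b.alive = False     # node-b crashes entirely
actions = reconcile(apps, [node_a, node_b])
print(actions)
```

Note that rescheduling only helps if there is a second server with enough spare capacity, which is the whole point of running n servers instead of one.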

You: But now domains such as api.faforever.com need to point to two servers?

Dr. Google: Now you need to put in a load balancer. It forwards each user request to one of the running instances.
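A load balancer in its simplest form is just round-robin over the instances that are currently healthy. The class below is a hypothetical illustration, not the API of Traefik or any other real load balancer, and the instance names `api-1` and `api-2` are made up.

```python
import itertools


class RoundRobinBalancer:
    """Toy load balancer: hands requests to healthy backends in turn."""

    def __init__(self, backends: list[str]):
        self.backends = backends
        self.healthy = set(backends)
        self._cycle = itertools.cycle(backends)

    def mark_down(self, backend: str) -> None:
        """Called when a backend fails its health check."""
        self.healthy.discard(backend)

    def pick(self) -> str:
        # Try each backend at most once per request before giving up.
        for _ in range(len(self.backends)):
            backend = next(self._cycle)
            if backend in self.healthy:
                return backend
        raise RuntimeError("no healthy backend")


lb = RoundRobinBalancer(["api-1", "api-2"])
first = [lb.pick() for _ in range(4)]
lb.mark_down("api-2")
after = [lb.pick() for _ in range(3)]
print(first, after)
```

While both instances are up, requests alternate between them; once `api-2` is marked down, everything goes to `api-1`. Of course the load balancer itself must not become the next single point of failure.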

You: But wait a second. If my application can be anywhere, where does the content server read its files from? Or where does the replay server write them to?

Dr. Google: Well, to make this work you no longer store files on the disk of the server you are running on, but on storage somewhere in the network, for example Ceph.

You: But how do I monitor all my apps and servers now?

Dr. Google: Don't worry, there are industry standard tools to scrape your services and collect data.

Sounds complicated? Yes, it is. But fortunately there is an industry standard called Kubernetes that orchestrates services in a cluster on top of Docker (reminder: Docker is what you already use).

You: Great. But wait a second, can Kubernetes even run 2 parallel databases for you?

Dr. Google: No. That's something you need to set up and configure manually.

You: But I don't want to do that?!

Dr. Google: Don't worry. You can rent a highly available database from us.

You: Hmm not so great. How about RabbitMQ?

Dr. Google: That has a high availability mode. It's easy to set up, it just halves your performance and breaks every now and then... or you just rent it from our partner in the marketplace!

You: Ooookay? Well, at least we have a solution, right? So I guess the replay server can run twice, right?

Dr. Google: Eeerm, no. Imagine your game has 4 players: 2 of them end up on one replay server and 2 on the other. So why don't you just store 2 replays?
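The replay problem is easy to reproduce in a toy model. The sketch below is hypothetical (instance names, game ID and player names are made up, and the real replay protocol is not modelled). It shows that naive round-robin scatters the four streams of one game across both instances. Routing by game ID would keep them together, but only if the load balancer knows the game ID, which a plain TCP load balancer that does not look inside the connection cannot know.

```python
import zlib
from collections import defaultdict
from itertools import cycle

INSTANCES = ["replay-1", "replay-2"]  # hypothetical instance names


def round_robin(streams: list[tuple[int, str]]) -> dict[str, list[str]]:
    """Spread incoming replay streams (game_id, player) over instances in turn."""
    placement = defaultdict(list)
    targets = cycle(INSTANCES)
    for game_id, player in streams:
        placement[next(targets)].append(f"game {game_id}/{player}")
    return dict(placement)


def by_game_id(streams: list[tuple[int, str]]) -> dict[str, list[str]]:
    """Route every stream of the same game to the same instance (needs the game ID)."""
    placement = defaultdict(list)
    for game_id, player in streams:
        # crc32 instead of hash(): Python's str hashing is randomized per process.
        index = zlib.crc32(str(game_id).encode()) % len(INSTANCES)
        placement[INSTANCES[index]].append(f"game {game_id}/{player}")
    return dict(placement)


streams = [(42, player) for player in ("p1", "p2", "p3", "p4")]
print(round_robin(streams))
print(by_game_id(streams))
```

With round-robin each instance ends up holding half of game 42; with routing by game ID one instance gets all four streams, and if that instance dies the replay is lost just as before.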

You: Well, that might work... hmm, no idea. But the lobby server, that will work, right?

Dr. Google: No! The lobby server keeps all state in memory. Depending on which one you connect to, you will only see the games on that one. You need to put the state into Redis. Your containers need to be stateless! And don't forget to have a highly available Redis!
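Here is a minimal sketch of the difference between in-memory and shared state. `LobbyInstance` is a hypothetical toy class, not the real lobby server, and a plain Python dict stands in for an external store such as Redis; a real store adds network round trips, serialization and its own availability problems.

```python
from typing import Optional


class LobbyInstance:
    """Toy lobby server instance; `games` holds the open games it knows about."""

    def __init__(self, games: Optional[dict[int, str]] = None):
        # No mapping passed in: private in-memory state, like a single lobby server today.
        # Mapping passed in: shared state, standing in for an external store such as Redis.
        self.games = games if games is not None else {}

    def host_game(self, game_id: int, title: str) -> None:
        self.games[game_id] = title

    def list_games(self) -> list[str]:
        return sorted(self.games.values())


# Two instances with in-memory state: each player only sees the games on "their" instance.
a, b = LobbyInstance(), LobbyInstance()
a.host_game(1, "4v4 custom game")
b.host_game(2, "1v1 ladder")
print(a.list_games(), b.list_games())

# Two instances sharing one external mapping: everybody sees every game.
shared: dict[int, str] = {}
c, d = LobbyInstance(shared), LobbyInstance(shared)
c.host_game(1, "4v4 custom game")
d.host_game(2, "1v1 ladder")
print(c.list_games(), d.list_games())
```

Moving the state out of the process is what makes the instances interchangeable, and it is also why this requires rewriting the lobby server rather than just starting it twice.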

You: Let me guess: I can find it on your marketplace?

Dr. Google: We have a fast learner here!

You: Tell me the truth. Does clustering work with FAF at all?

Dr. Google: No, it does not, at least not without major rewrites of several applications. Also, many of the apps you use can't run as multiple instances, so you need to replace or enhance them. But you want to reduce downtimes, right? Riiiight?

-- 4 years later --

You: Oooh I finally did that. Can we move to the cluster now?

Dr. Google: Sure! That's 270$ a month for the HA-managed MySQL database, 150$ for the HA-managed RabbitMQ, 50$ for the HA-managed Redis, 40$ a month for the 1TB cloud storage and 500$ for the traffic it causes. Don't forget your Kubernetes nodes: you need roughly 2x 8 cores for 250$ and 2x 12GB RAM for 50$, plus 30$ for the Stackdriver logging. That's a total of 1340$ per month. Oh wait, you also needed a test server...??

You: But on Hetzner I only paid 75$ per month!!
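Adding up the prices from Dr. Google's offer confirms the total and shows how it compares with the 75$ Hetzner bill. The figures are the ones quoted in the dialogue; the line item labels are my own shorthand.

```python
# Monthly prices as quoted by "Dr. Google" above (USD).
MONTHLY_COSTS_USD = {
    "HA-managed MySQL": 270,
    "HA-managed RabbitMQ": 150,
    "HA-managed Redis": 50,
    "1 TB cloud storage": 40,
    "storage traffic": 500,
    "Kubernetes nodes, 2x 8 cores": 250,
    "Kubernetes nodes, 2x 12 GB RAM": 50,
    "Stackdriver logging": 30,
}
HETZNER_MONTHLY_USD = 75

cluster_total = sum(MONTHLY_COSTS_USD.values())
print(f"cluster: {cluster_total}$/month, "
      f"{cluster_total / HETZNER_MONTHLY_USD:.1f}x the Hetzner server")
```

That is roughly 18 times the current bill, before the test server.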

Dr. Google: Yes, but it wasn't managed and HIGHLY AVAILABLE.

You: But then you run it all for me and it's always available right?

Dr. Google: Yes, of course... I mean... You still need to size your cluster correctly, deploy the apps to Kubernetes, set up the monitoring, configure the ingress routes, oh and we reserve one slot per week where we are allowed to restart the database for upd... OH GOD HE PULLED A GUN!!